My Meeting With Claude Shannon, Father of the Information Age

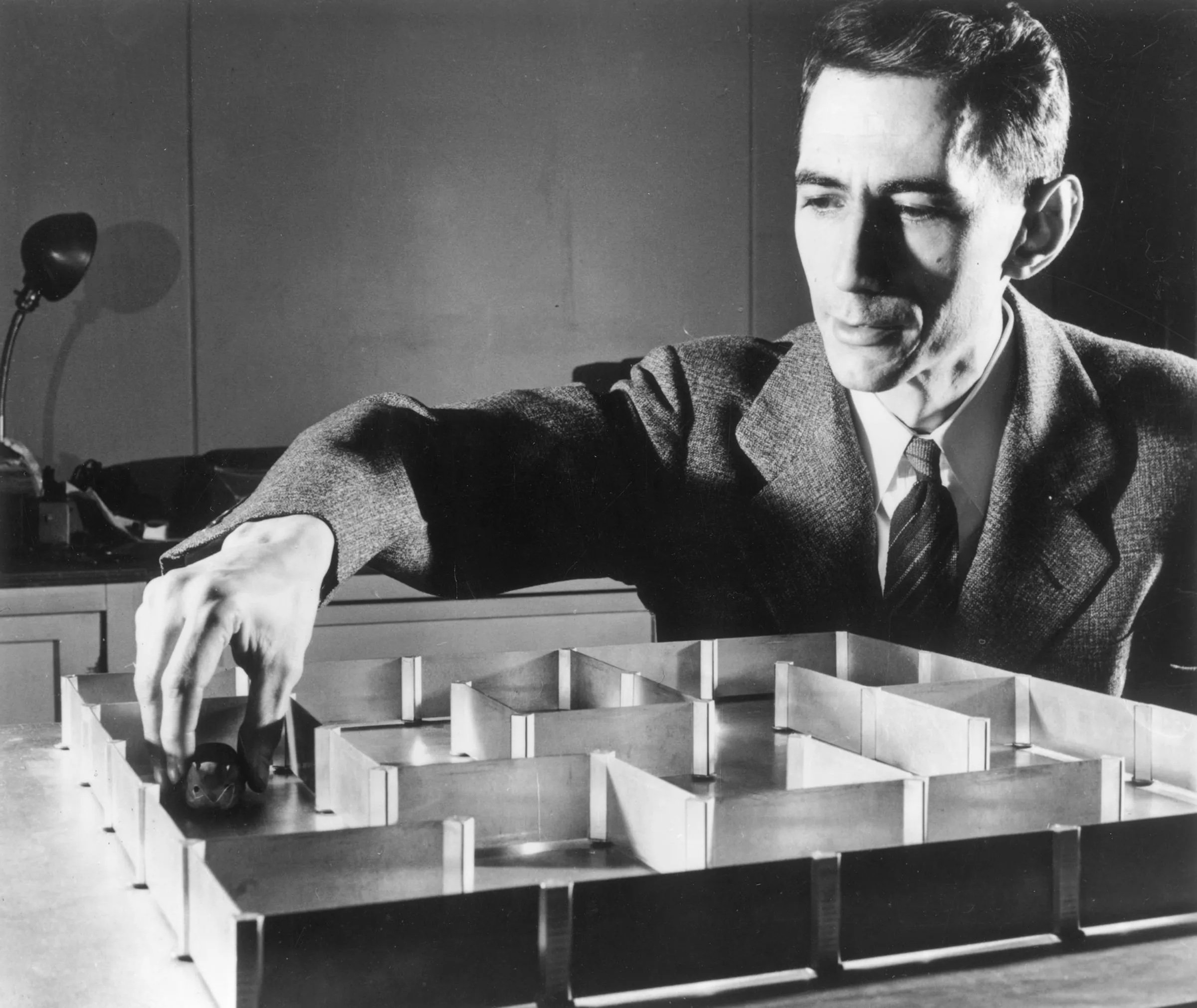

In the early 1950s Claude Shannon designed a mechanical mouse named “Theseus” that could navigate a maze in an early demonstration of artificial intelligence. Source: Wikipedia.

June 29, 2023. No scientist has an impact-to-fame ratio greater than Claude Elwood Shannon, the inventor of information theory, who died in 2001 at the age of 84. Information theory underpins all our digital technologies, including the chatbots that have gotten us so excited lately. You can see Shannon’s ideas glinting within the “it from bit” interpretation of quantum mechanics; the “conservation of information” principle of physics (which implies “conservation of ignorance”); and the integrated information theory model of consciousness. I profiled Shannon in Scientific American in 1990 after visiting him at his home outside Boston. Below is an edited version of that profile followed by excerpts from our conversation. —John Horgan

Claude Shannon can't sit still. We’re in the living room of his home north of Boston, an edifice called Entropy House, and I’m trying to get him to recall how he came up with information theory. Shannon, who is a boyish 73, with a shy grin and snowy hair, is tired of dwelling on his past. He wants to show me his gadgets.

Over the mild protests of his wife, Betty, he leaps from his chair and disappears into another room. When I catch up with him, he proudly shows me his seven chess-playing machines, gasoline-powered pogo-stick, hundred-bladed jackknife, two-seated unicycle and countless other marvels.

Some of his personal creations, such as a mechanical mouse that navigates a maze, a juggling W. C. Fields mannequin and a computer that calculates in Roman numerals, are dusty and in disrepair. But Shannon seems as delighted with his toys as a 10-year-old on Christmas morning.

Is this the man who, at Bell Labs in 1948, wrote “A Mathematical Theory of Communication,” the Magna Carta of the digital age? Whose work is described as the greatest “in the annals of technological thought” by Bell Labs executive Robert Lucky?

Yes. The inventor of information theory also invented a rocket-powered Frisbee and a theory of juggling, and he is still remembered at Bell Labs for juggling while riding a unicycle through the halls. “I’ve always pursued my interests without much regard for financial value or value to the world,” Shannon says cheerfully. “I’ve spent lots of time on totally useless things.”

Shannon’s delight in mathematical abstractions and gadgetry emerged during his childhood in Michigan, where he was born in 1916. He played with radio kits and erector sets and enjoyed solving mathematical puzzles. “I was always interested, even as a boy, in cryptography and things of that sort,” Shannon says. One of his favorite stories was “The Gold Bug,” an Edgar Allan Poe tale about a mysterious encrypted map.

As an undergraduate at the University of Michigan, Shannon majored in mathematics and electrical engineering. In his MIT master’s thesis, he showed how an algebra invented by George Boole—which deals with such concepts as “if X or Y happens but not Z, then Q results”—could represent the workings of switches and relays in electronic circuits.

The implications of the paper were profound: Circuit designs could be tested mathematically before they were built rather than through tedious trial and error. Engineers now routinely design computer hardware and software, telephone networks and other complex systems with the aid of Boolean algebra. ("I've always loved that word, Boolean," Shannon says.)
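Here is a small illustration of "testing a circuit mathematically before building it." It uses the now-standard convention in which switches wired in series conduct only when both are closed (AND) and switches wired in parallel conduct when either is closed (OR). The two designs below are hypothetical examples, not circuits from Shannon's thesis. Both implement the rule "if X or Y happens but not Z, then Q results," and an exhaustive truth-table check confirms they behave identically, so the cheaper one can be built.

```python
from itertools import product

# Now-standard switching convention: series = AND, parallel = OR.
def series(a, b):
    return a and b

def parallel(a, b):
    return a or b

# Q = (X OR Y) AND NOT Z, wired two different ways (hypothetical designs).
def design_a(x, y, z):
    # Two parallel branches, each with its own "not Z" contact: 4 contacts.
    return parallel(series(x, not z), series(y, not z))

def design_b(x, y, z):
    # X and Y in parallel, then a single shared "not Z" contact: 3 contacts.
    return series(parallel(x, y), not z)

def equivalent(f, g, n_inputs):
    """Compare two circuits on every combination of switch states."""
    return all(f(*bits) == g(*bits)
               for bits in product([False, True], repeat=n_inputs))

print(equivalent(design_a, design_b, 3))  # True: same behavior, fewer contacts
```

The check relies on the distributive law of Boolean algebra, (X AND NOT Z) OR (Y AND NOT Z) = (X OR Y) AND NOT Z, which is exactly the kind of simplification that lets an engineer trim hardware on paper.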

After getting his doctorate at MIT, Shannon went to Bell Laboratories in 1941. During World War II, he helped develop encryption systems, which inspired his theory of communication. Just as codes protect information from prying eyes, he realized, so they can shield it from static and other forms of interference. The codes could also be used to package information more efficiently.

“My first thinking about [information theory],” Shannon says, “was how you best improve information transmission over a noisy channel. This was a specific problem, where you’re thinking about a telegraph system or a telephone system. But when you get to thinking about that, you begin to generalize in your head about all these broader applications.”

The centerpiece of his 1948 paper is his definition of information. Sidestepping questions about meaning (which his theory “can’t and wasn’t intended to address”), he demonstrates that information is a measurable commodity. Roughly speaking, a message’s information grows with its improbability, or its capacity to surprise an observer.
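To make "capacity to surprise" concrete: in information theory the surprise of an outcome with probability p is usually measured logarithmically, as log2(1/p) bits, so each halving of the probability adds one bit rather than doubling the information. The short sketch below assumes that standard definition; the function name is illustrative.

```python
import math

def surprisal_bits(p):
    """Self-information of an outcome with probability p, in bits: log2(1/p)."""
    if not 0 < p <= 1:
        raise ValueError("probability must be in (0, 1]")
    return math.log2(1 / p)

# A certain event carries no surprise; rarer events carry more bits.
for p in (1.0, 0.5, 0.25, 1 / 1024):
    print(f"p = {p:<10g} -> {surprisal_bits(p):g} bits")
```

A certain outcome yields 0 bits, a coin flip 1 bit, and a one-in-1,024 outcome 10 bits.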

Shannon also relates information to entropy, which in thermodynamics denotes a system’s randomness, or “shuffledness,” as some physicists put it. Shannon defines the basic unit of information, which a Bell Labs colleague dubbed a binary digit or “bit,” as the information carried by a message that singles out one of two equally likely states. One can encode lots of information in few bits, just as in the old game “Twenty Questions” one can quickly zero in on the correct answer through deft questioning.
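The Twenty Questions analogy can be made precise. Shannon's entropy, H = -Σ p log2 p, gives the average information per outcome in bits. When every yes/no question splits the remaining possibilities in half, each answer supplies one bit, so 20 questions can single out one item among 2^20 (about a million) equally likely candidates. The sketch below is illustrative; the function names and the secret number are arbitrary choices.

```python
import math

def entropy_bits(probs):
    """Shannon entropy H = -sum(p * log2 p), in bits; zero-probability terms add 0."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

def twenty_questions(secret, candidates):
    """Locate `secret` in sorted `candidates` with yes/no questions that halve the range."""
    lo, hi = 0, len(candidates) - 1
    questions = 0
    while lo < hi:
        mid = (lo + hi) // 2
        questions += 1
        if secret <= candidates[mid]:  # "Is it at or before this item?"
            hi = mid
        else:
            lo = mid + 1
    return candidates[lo], questions

print(entropy_bits([0.5, 0.5]))  # fair coin: 1 bit per toss
print(entropy_bits([0.9, 0.1]))  # biased coin: less than half a bit per toss
print(twenty_questions(777_777, range(2**20)))  # found in 20 questions
```

A heavily biased coin is predictable, so each toss carries well under one bit; that gap is what a good code exploits.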

Shannon shows that any given communications channel has a maximum capacity for reliably transmitting information. Actually, he shows that although one can approach this maximum through clever coding, one can never quite reach it. The maximum has come to be known as the Shannon limit.
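For a feel of what a channel's maximum capacity looks like, consider a textbook case the article does not discuss: a binary symmetric channel that flips each transmitted bit with probability p. Its capacity is 1 - H(p) bits per use, where H is the binary entropy function. The sketch below simply evaluates that formula; the choice of channel and the sample error rates are assumptions made for illustration.

```python
import math

def binary_entropy(p):
    """H(p) = -p log2 p - (1 - p) log2 (1 - p), in bits."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_capacity(p):
    """Capacity of a binary symmetric channel with bit-flip probability p."""
    return 1.0 - binary_entropy(p)

for p in (0.0, 0.01, 0.11, 0.5):
    print(f"flip probability {p:.2f}: capacity {bsc_capacity(p):.3f} bits per use")
```

A channel that garbles about 11 percent of its bits can still carry roughly half a bit per use reliably, given sufficiently clever coding; at 50 percent the output is pure noise and the capacity drops to zero.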

Shannon’s 1948 paper established how to calculate the Shannon limit—but not how to approach it. Shannon and others took up that challenge later. The first step was to eliminate redundancy from the message. Just as a laconic Romeo can get his message across with a mere “i lv u,” a good code compresses information into a compact package. A so-called error-correction code adds just enough redundancy to ensure that the stripped-down message is not obscured by noise.
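The two steps can be illustrated with two classic textbook codes that came soon after Shannon's paper: Huffman coding (David Huffman, 1952), which strips redundancy by giving frequent symbols shorter codewords, and the Hamming(7,4) code (Richard Hamming, Bell Labs, 1950), which adds three parity bits to every four data bits so that any single flipped bit can be located and corrected. These are simple illustrations of the two ideas, not Shannon's own methods, and the function names are hypothetical.

```python
import heapq
from collections import Counter

def huffman_code(text):
    """Build a prefix code that gives frequent symbols shorter codewords."""
    # Heap entries: (weight, tie_breaker, {symbol: codeword_so_far}).
    heap = [(n, i, {sym: ""}) for i, (sym, n) in enumerate(Counter(text).items())]
    heapq.heapify(heap)
    if len(heap) == 1:  # a single distinct symbol still needs one bit
        return {sym: "0" for sym in heap[0][2]}
    count = len(heap)
    while len(heap) > 1:
        w1, _, left = heapq.heappop(heap)   # two lightest subtrees
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, count, merged))
        count += 1
    return heap[0][2]

def huffman_decode(bits, code):
    """Read bits left to right; a prefix code makes each match unambiguous."""
    inverse = {c: s for s, c in code.items()}
    out, current = [], ""
    for b in bits:
        current += b
        if current in inverse:
            out.append(inverse[current])
            current = ""
    return "".join(out)

def hamming74_encode(d):
    """Add three parity bits to data bits [d1, d2, d3, d4]; output positions 1..7."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]

def hamming74_decode(c):
    """Correct up to one flipped bit, then return the four data bits."""
    c = list(c)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    error_pos = s1 + 2 * s2 + 4 * s3  # 1-based position of the bad bit; 0 = none
    if error_pos:
        c[error_pos - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]

# Step 1: compress Romeo's message (the code table itself is not counted here).
message = "i lv u"
code = huffman_code(message)
bits = "".join(code[ch] for ch in message)
print(len(bits), "bits versus", 8 * len(message), "bits in 8-bit ASCII")

# Step 2: protect four bits against a single flip in transit.
data = [1, 0, 1, 1]
received = hamming74_encode(data)
received[4] ^= 1  # noise flips one bit
print(hamming74_decode(received) == data)  # the flipped bit is repaired
```

Note the tension the paragraph describes: compression removes redundancy, and error correction then adds back a small, carefully structured amount of it.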

Shannon’s ideas were too prescient to have an immediate impact. Not until the early 1970s did high-speed integrated circuits and other advances allow engineers fully to exploit information theory. Today Shannon’s insights help shape virtually all technologies that store, process, or transmit information in digital form.

Like quantum mechanics and relativity, information theory has captivated audiences beyond the one for which it was intended. Researchers in physics, linguistics, psychology, economics, biology, even music and the arts sought to apply information theory in their disciplines. In 1958, a technical journal published an editorial deploring this trend: “Information Theory, Photosynthesis, and Religion.”

Applying information theory to biological systems is not so far-fetched, according to Shannon. “The nervous system is a complex communication system, and it processes information in complicated ways,” he says. Asked whether he thinks machines can “think,” he replies: “You bet. I’m a machine, and you’re a machine, and we both think, don’t we?”

In 1950 he wrote an article for Scientific American on chess-playing machines, and he remains fascinated by the field of artificial intelligence. Computers are still “not up to the human level yet” in terms of raw information processing, he says. Simply replicating human vision in a machine remains a formidable task. But, he adds, “it is certainly plausible to me that in a few decades machines will be beyond humans.”

In recent years, Shannon’s great obsession has been juggling. He has built several juggling machines and devised a theory of juggling: If B equals the number of balls, H the number of hands, D the time each ball spends in a hand, F the time of flight of each ball, and E the time each hand is empty, then B/H = (D + F)/(D + E). (Unfortunately, the theory could not help Shannon juggle more than four balls at once.)
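Shannon's juggling theorem can be solved for any one of its quantities. The sketch below rearranges B/H = (D + F)/(D + E) to find either the number of balls or the flight time each throw needs. The timings are hypothetical values chosen only to show the arithmetic, not measurements of Shannon or any real juggler; exact fractions avoid rounding noise.

```python
from fractions import Fraction

# Juggling theorem: B/H = (D + F)/(D + E), with B balls, H hands,
# D dwell time in a hand, F flight time, E empty-hand time.

def ball_count(hands, dwell, flight, empty):
    """Solve the theorem for B."""
    return hands * (dwell + flight) / (dwell + empty)

def flight_time(n_balls, hands, dwell, empty):
    """Solve the theorem for F: F = (B/H)(D + E) - D."""
    return Fraction(n_balls, hands) * (dwell + empty) - dwell

# Hypothetical timings (seconds) for a two-handed, three-ball pattern.
D, E = Fraction(3, 10), Fraction(1, 5)
F = flight_time(3, 2, D, E)
print(F, float(F))            # 9/20 0.45
print(ball_count(2, D, F, E)) # 3
```

With each hand cycling every half second, three balls in two hands need about 0.45 seconds of flight per throw; adding a ball without speeding up the hands means throwing higher.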

After leaving Bell Labs in 1956 for MIT, Shannon published little on information theory. Some former Bell colleagues suggest that he tired of the field he created. Shannon denies that claim. He had become interested in other topics, like artificial intelligence, he says. He continued working on information theory, but he considers most of his results unworthy of publication. “Most great mathematicians have done their finest work when they were young,” he observes.

Decades ago, Shannon stopped attending information-theory meetings. Colleagues say he suffered from severe stage fright. But in 1985 he made an unexpected appearance at a conference in Brighton, England, and the meeting’s organizers persuaded him to speak at a dinner banquet. He talked for a few minutes. Then, fearing he was boring his audience, he pulled three balls out of his pockets and began juggling. The audience cheered and lined up for autographs. One engineer recalled, “It was as if Newton had showed up at a physics conference.”

EXCERPTS FROM SHANNON INTERVIEW, NOVEMBER 2, 1989

Horgan: When you started working on information theory, did you have a specific goal in mind?

Shannon: My first thinking about it was: How do you best forward transmissions in a noisy channel, something like that. That kind of a specific problem, where you think of them in a telegraph system or telephone system. But when I begin thinking about that, you begin to generalize in your head all of the broader applications. So almost all of the time, I was thinking about them as well. I would often phrase things in terms of a very simplified channel. Yes or no’s or something like that. So I had all these feelings of generality very early.

Horgan: I read that John von Neumann suggested you should use the word “entropy” as a measure of information because no one understands entropy and so you can win arguments about your theory.

Shannon: It sounds like the kind of remark I might have made as a joke… Crudely speaking, the amount of information is how much chaos is there in the system. But the mathematics comes out right, so to speak. The amount of information measured by entropy determines how much capacity to leave in the channel.

Horgan: Were you surprised when people tried to use information theory to analyze the nervous system?

Shannon: That’s not so strange if you make the case that the nervous system is a complex communication system, which processes information in complicated ways… Mostly what I wrote about was communicating from one point to another, but I also spent a lot of time in transforming information from one form to another, combining information in complicated ways, which the brain does and the computers do now. So all of these things are kind of a generalization of information theory, where you are talking about working to change its form one way or another and combine with others, in contrast to getting it from one place to another. So, yes all those things I see as a kind of a broadening of information theory. Maybe it shouldn’t be called the information theory. Maybe it should be called "transformation of information" or something like that.

Horgan: Scientific American had a special issue on communications in 1972. John Pierce [an electrical engineer and friend of Shannon’s] said in the introductory article that your work could be extended to include meaning [in language].

Shannon: Meaning is a pretty hard thing to get a grip on… In mathematics and physics and science and so on, things do have a meaning, about how they are related to the outside world. But usually they deal with very measurable quantities, whereas most of our talk between humans is not so measurable. It’s a very broad thing which brings up all kinds of emotions in your head when you hear the words. So, I don’t think it is all that easy to encompass that in a mathematical form.

Horgan: People have told me that by the late 1950s, you got tired of information theory.

Shannon: It’s not that I was tired of it. It’s that I was working on a different thing... I was playing around with machines to do computations. That’s been more of my interest than information theory itself. The intelligent-machine idea.

Horgan: Do you worry that machines will take over some of our functions?

Shannon: The machines may be able to solve a lot of problems we have wondered about and reduce our menial labor problem… If you are talking about the machines taking over, I’m not really worried about that. I think so long as we build them, they won’t take over.

Horgan: Did you ever feel any pressure on you, at Bell Labs, to work on something more practical?

Shannon: No. I’ve always pursued my interests without much regard for financial value or value to the world. I’ve been more interested in whether a problem is exciting than what it will do. … I’ve spent lots of time on totally useless things.

Further Reading:

The concept of information riddles my free online books Mind-Body Problems and My Quantum Experiment. See also my profile of physicist John Wheeler, inventor of the “it from bit.”